In this blog post, we will learn how to create S3 buckets using Terraform, an Infrastructure as Code (IaC) tool.

Table of Contents

● What is Terraform?

● What is S3?

● Installation of Terraform

● Installation of AWS CLI

● Configuring AWS CLI

● Creating a Working Directory for Terraform

● Understanding Terraform files

● Creating a Single S3 Bucket

● Creating Multiple S3 Buckets

Prerequisites

● Installation of Terraform

● Installation of AWS CLI

● An IAM user with programmatic access

What is Terraform?

● Terraform is an Infrastructure as Code tool for creating, modifying, and deleting cloud resources from configuration files.

● It supports many cloud providers, such as AWS, Azure, GCP, and IBM Cloud.

What is S3?

S3 stands for Simple Storage Service.

Amazon S3 has a simple web services interface that you can use to store and retrieve any amount of data, at any time, from anywhere on the web.

Installing Terraform

Terraform can be installed in either of two ways:

1. Using a binary package (.zip)

2. Compiling from source

Download the package suitable for your system from the official Terraform downloads page and install it.

To check the version of Terraform installed on your system, run the following command:

terraform -v

Installing AWS CLI

The AWS CLI is a tool for creating and managing resources in AWS services.

On Ubuntu, install the AWS CLI with the following command:

sudo apt-get install awscli

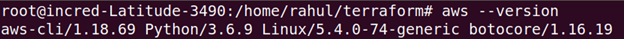

To verify the installation, run the following command:

aws --version

Configuring AWS CLI

Terraform templates can authenticate to AWS services through a configured AWS CLI profile.

Verify that the IAM user has been granted the required privileges.

Run the command below and enter the access key ID, secret access key, and default AWS region when prompted:

aws configure

Understanding Terraform Files

variables.tf:

A file that declares the input variables used by the templates, such as the access key, secret key, and AWS region.

What not to do with access keys

Never hard-code credentials in a file.

What should we do?

Configure an AWS CLI profile with the required privileges for use with the Terraform templates.

The AWS keys are then stored in the /home/zenesys/.aws/credentials file (that is, ~/.aws/credentials for the current user); aws configure writes this file for you.

Reference that profile in the Terraform provider configuration so it is used for authentication.
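As a minimal sketch of referencing a profile, assuming you ran plain aws configure (which writes the profile named default) and that us-east-1 is a placeholder for your own region, the provider block could look like this. The AWS provider reads ~/.aws/credentials by default, so no keys appear in the file.

```hcl
# provider.tf: authenticate through an AWS CLI profile instead of hard-coded keys.
# "default" is the profile that plain `aws configure` writes; use another name
# if you created one with `aws configure --profile <name>`.
provider "aws" {
  profile = "default"
  region  = "us-east-1" # assumption: replace with your AWS region
}
```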


provider.tf:

This file configures the provider. When you run terraform init, Terraform downloads the provider plugin into the working directory; the plugin handles communication with the APIs of the cloud providers Terraform supports, such as AWS, Azure, Google Cloud, IBM Cloud, Oracle Cloud, and DigitalOcean.
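To make the plugin that terraform init downloads predictable, you can pin it in a terraform block. This is a sketch: the file name versions.tf and both version constraints are assumptions, so pick the versions you have tested with.

```hcl
# versions.tf (hypothetical file name): tell `terraform init` which provider to fetch
terraform {
  required_version = ">= 1.0" # assumption: minimum Terraform CLI version

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumption: adjust to the provider version you use
    }
  }
}
```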

main.tf:

A template that contains the resource definitions. The file can have any name ending in .tf, because Terraform loads every .tf file in the working directory.

Creating a Single S3 Bucket Using Terraform

Suppose you need to create an S3 bucket.

In the provider.tf file, add the following:

provider "aws" {
  access_key = var.aws_access_key
  secret_key = var.aws_secret_key
  region     = var.aws_region
}

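The creds.tf file declares the variables that hold the AWS credentials and region, which Terraform uses to create the S3 bucket.

Rather than pasting real keys into this file, prefer configuring an AWS profile to supply the credentials, as described above.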


variable "aws_access_key" {
  default = "PASTE_ACCESS_KEY_HERE"
}

variable "aws_secret_key" {
  default = "PASTE_SECRET_KEY_HERE"
}

variable "aws_region" {
  default = "ENTER_AWS_REGION"
}
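If you do keep these variables, one option is to declare them without real defaults so the file never contains keys. This sketch assumes Terraform 0.14 or later (for sensitive), and the us-east-1 default is a placeholder. Values can then come from a .tfvars file kept out of version control, or from the TF_VAR_aws_access_key and TF_VAR_aws_secret_key environment variables.

```hcl
# creds.tf alternative: no credentials are stored in the file itself.
variable "aws_access_key" {
  type      = string
  sensitive = true # redacts the value in plan/apply output (Terraform 0.14+)
}

variable "aws_secret_key" {
  type      = string
  sensitive = true
}

variable "aws_region" {
  type    = string
  default = "us-east-1" # assumption: replace with your AWS region
}
```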

Then create a file called s3.tf containing the Terraform template for the S3 bucket.

The template below creates a single S3 bucket that is private and has versioning enabled.

resource "aws_s3_bucket" "samplebucket" {
  bucket = "testing-s3-with-terraform"
  acl    = "private"

  versioning {
    enabled = true
  }

  tags = {
    Name        = "Bucket1"
    Environment = "Test"
  }
}

This template creates a bucket named “testing-s3-with-terraform” and tags it with Name and Environment.
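Starting with version 4 of the AWS provider, the inline acl and versioning arguments of aws_s3_bucket are deprecated in favour of separate resources. A minimal sketch of the same private, versioned bucket in that style, assuming provider 4.x or 5.x: instead of an ACL it adds a public access block, since new S3 buckets are private by default. Use it in place of the block above rather than alongside it, because both declare aws_s3_bucket.samplebucket.

```hcl
resource "aws_s3_bucket" "samplebucket" {
  bucket = "testing-s3-with-terraform"

  tags = {
    Name        = "Bucket1"
    Environment = "Test"
  }
}

# Versioning is managed by its own resource in provider 4.x and later.
resource "aws_s3_bucket_versioning" "samplebucket" {
  bucket = aws_s3_bucket.samplebucket.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Block every form of public access so the bucket stays private.
resource "aws_s3_bucket_public_access_block" "samplebucket" {
  bucket = aws_s3_bucket.samplebucket.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```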

Here we specified the bucket name explicitly. If the bucket argument is omitted, Terraform assigns a random, unique name. Keep in mind that S3 bucket names must be globally unique.
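A middle ground between a fixed name and a fully random one is the bucket_prefix argument, which makes Terraform generate a unique name starting with the given prefix. In this sketch the resource label and the prefix are hypothetical.

```hcl
# Terraform generates a unique bucket name beginning with this prefix.
# bucket_prefix conflicts with bucket, so set only one of the two.
resource "aws_s3_bucket" "prefixed" {
  bucket_prefix = "testing-s3-" # hypothetical prefix
}
```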

First run terraform init in the working directory to download the AWS provider plugin, then run terraform plan to perform a dry run.

Run terraform apply to provision the S3 bucket.

To confirm that the bucket was created, sign in to the S3 console and search for the bucket name.

Click the bucket name, choose Properties, and check that Bucket Versioning is Enabled.
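You can also check the result from the command line by declaring outputs; after terraform apply, running terraform output prints them. This sketch assumes the samplebucket resource defined above, and the file name outputs.tf is only a convention.

```hcl
# outputs.tf (hypothetical file name)
output "bucket_name" {
  value = aws_s3_bucket.samplebucket.id # for aws_s3_bucket, id is the bucket name
}

output "bucket_arn" {
  value = aws_s3_bucket.samplebucket.arn
}
```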

To delete the resources created by the template, run terraform destroy.
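If a bucket must never be removed by accident, Terraform's lifecycle meta-argument can make any plan that would destroy it fail. A sketch: the lifecycle block goes inside the existing samplebucket resource, and the other arguments from the template above are omitted here for brevity.

```hcl
resource "aws_s3_bucket" "samplebucket" {
  bucket = "testing-s3-with-terraform"
  # ... remaining arguments from the template above ...

  # terraform destroy (or any change that replaces the bucket) now errors out
  lifecycle {
    prevent_destroy = true
  }
}
```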

Creating Multiple S3 Buckets at Once

The script below creates multiple S3 buckets at once.

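variable "s3_bucket_name" {
  type    = list(string)
  # S3 bucket names must be unique, so every entry must differ
  default = ["terr-test-buc-1", "terr-test-buc-2", "terr-test-buc-3"]
}

resource "aws_s3_bucket" "samplebucket" {
  # Create one bucket per name in the list
  count  = length(var.s3_bucket_name)
  bucket = var.s3_bucket_name[count.index]
  acl    = "private"

  versioning {
    enabled = true
  }

  # Let terraform destroy delete the buckets even if they still contain objects
  force_destroy = true
}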

In the script above, the s3_bucket_name variable holds the list of bucket names you want to create.

The default argument supplies that list of bucket names.
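Because a repeated name would make two resources try to create the same bucket, the variable could reject duplicates up front with a validation block (Terraform 0.13 or later). This sketch would replace the variable declaration above rather than sit alongside it.

```hcl
variable "s3_bucket_name" {
  type    = list(string)
  default = ["terr-test-buc-1", "terr-test-buc-2", "terr-test-buc-3"]

  # Fail at plan time if any bucket name appears more than once.
  validation {
    condition     = length(distinct(var.s3_bucket_name)) == length(var.s3_bucket_name)
    error_message = "Bucket names in s3_bucket_name must not repeat."
  }
}
```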

count sets how many buckets to create, based on the length of the s3_bucket_name list, and count.index selects the bucket name for each one.
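An alternative to count is for_each, which keys each bucket by its name instead of its position. With count, removing a name from the middle of the list shifts the indices, so Terraform plans to replace the buckets after it; with for_each the remaining buckets are untouched. A sketch reusing the s3_bucket_name variable; it replaces the count-based resource rather than sitting alongside it.

```hcl
resource "aws_s3_bucket" "samplebucket" {
  # One bucket per unique name; instances are addressed as samplebucket["<name>"]
  for_each = toset(var.s3_bucket_name)

  bucket = each.value
  acl    = "private"

  versioning {
    enabled = true
  }

  force_destroy = true
}

# Map of bucket name => ARN for all buckets created above
output "bucket_arns" {
  value = { for name, b in aws_s3_bucket.samplebucket : name => b.arn }
}
```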

Run terraform plan for a dry run, then run terraform apply to create the buckets.

Conclusion

With Terraform templates, we can provision S3 buckets, either one at a time or several at once, in a repeatable way.

